If your website isn’t being crawled and indexed effectively, your SEO strategy is dead on arrival. You could have world-class content and a beautiful design, but if search engines can’t reach it, it won’t show up in search results—period. Maximizing website crawlability and indexing isn’t just a technical task to check off a list. It’s a foundational element of your organic strategy that can dramatically impact how (and if) your content performs.

In this article, I’ll walk through the practical steps to improve website crawlability and indexing, from the basics to advanced techniques. Whether you’re a solo marketer, a digital marketing consultant, or working within a large enterprise, these steps will help you ensure your content is discoverable, indexable, and ready to rank.

What Is Website Crawlability and Indexing?

Before we dive in, let’s define the terms. Crawlability refers to a search engine’s ability to access and navigate your website’s pages using bots (also called crawlers or spiders). Indexing is what happens after a page is successfully crawled; it’s when the search engine stores the content in its database, making it eligible to appear in search results.

So if Google can’t crawl your pages, they won’t be indexed. And if they’re not indexed, they’re invisible.

Why Crawlability and Indexing Matter

Too many businesses focus on publishing content but forget about the critical infrastructure that makes that content discoverable. Crawl budget limitations, broken links, and incorrect meta directives can all act as roadblocks. If even 10 percent of your site is uncrawlable or misconfigured, it can create a ripple effect across your SEO performance.

When you improve website crawlability and indexing, you:

- Increase the likelihood your pages appear in SERPs

- Improve ranking potential for new content

- Ensure your site updates are reflected quickly

- Avoid duplicate content and redirect issues

Let’s break down the steps to get this right.

Step 1: Optimize Your Site Structure

Crawlability starts with a logical, clean site structure. This means:

- Keep your most important content within three clicks of the homepage

- Use a clear hierarchy (Homepage > Category > Subcategory > Page)

- Link top-performing pages throughout the site

- Avoid orphan pages—every page should be linked to from somewhere

A flat, well-organized structure helps crawlers navigate your content efficiently and ensures that no page gets left behind.
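To make the three-click rule and the orphan check concrete, here is a minimal Python sketch. It assumes you already have a map of internal links (for example, exported from a crawl); the `site` dictionary and its URLs are made up for illustration. Note that it treats any page that can't be reached from the homepage as an orphan, which is slightly broader than "has no inbound links at all."

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search from the homepage; returns {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def audit_structure(links, home, max_depth=3):
    """Return (too_deep, orphans) for a site described by an internal-link map."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    orphans = sorted(all_pages - set(depths))  # never reached from the homepage
    return too_deep, orphans

# Hypothetical link map: each key is a page, each value the pages it links to.
site = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/seo-basics"],
    "/products": ["/products/widgets"],
    "/products/widgets": ["/products/widgets/blue"],
    "/products/widgets/blue": ["/products/widgets/blue/specs"],
    "/old-landing-page": ["/"],
}

too_deep, orphans = audit_structure(site, "/")
print("Deeper than three clicks:", too_deep)
print("Unreachable from the homepage:", orphans)
```

In this example the specs page sits four clicks deep and the old landing page has no path leading to it, so both would be flagged.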

Step 2: Create and Submit XML Sitemaps

Your XML sitemap is a roadmap for search engines. It lists all important pages and signals which ones should be crawled and indexed.

Best practices:

- Keep it updated automatically (your CMS or SEO plugin can help)

- Submit it via Google Search Console and Bing Webmaster Tools

- Use separate sitemaps for different content types (blog posts, product pages, videos)

- Ensure it doesn’t include redirects or 404s

Sitemaps are especially helpful for larger sites with deeper architecture or frequently updated content.
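If your CMS doesn’t generate a sitemap for you, the idea can be sketched in a few lines of Python. This is an illustration rather than a production generator: the `pages` list, its URLs, and its status codes are assumptions standing in for whatever your own crawl or database provides. The point is the filter that keeps redirects and 404s out of the file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, status_code, last_modified) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status, lastmod in pages:
        if status != 200:
            continue  # leave out redirects (3xx) and missing pages (404)
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical crawl results for illustration.
pages = [
    ("https://example.com/", 200, "2025-03-01"),
    ("https://example.com/blog/seo-basics", 200, "2025-02-14"),
    ("https://example.com/old", 301, "2024-11-02"),
    ("https://example.com/missing", 404, "2024-09-30"),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```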

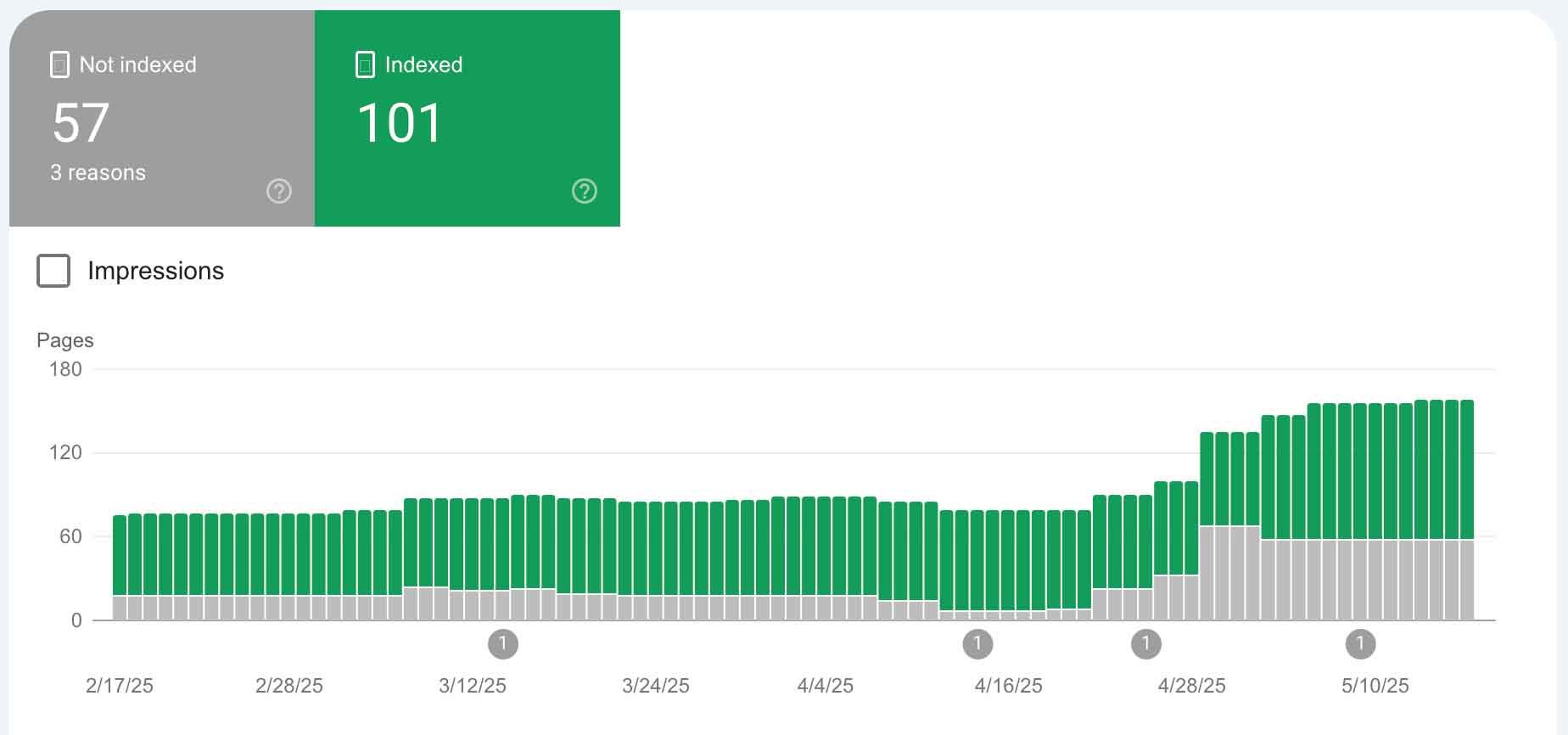

Step 3: Fix Crawl Errors in Google Search Console

One of the best ways to improve website crawlability and indexing is to regularly audit Google Search Console.

Focus on:

- “Not indexed” pages in the Page indexing report (formerly called Index Coverage)

- Server errors (5xx), soft 404s, and redirect loops

- Excluded pages with “noindex” tags or blocked by robots.txt

Resolve issues like:

- Removing outdated or unnecessary “noindex” tags

- Updating internal links that point to 404 pages

- Ensuring your robots.txt doesn’t block critical directories

Make this a monthly check-in. Small issues can compound quickly!
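Redirect loops are easy to miss by eye, so a small script can help between audits. The sketch below assumes you have a simple map of redirects (source path to target path), for example pulled from your server config or a crawl export; the `redirects` dictionary here is invented for the example.

```python
def follow_redirects(start, redirects, max_hops=10):
    """Follow a redirect map from `start`; return (chain, status)."""
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"  # we came back to a URL already visited
        seen.add(current)
        if len(chain) - 1 >= max_hops:
            return chain, "too many hops"
    return chain, "ok"

# Hypothetical redirect map: source path -> target path.
redirects = {
    "/a": "/b",
    "/b": "/c",
    "/c": "/a",       # closes a loop: /a -> /b -> /c -> /a
    "/old": "/new",
}

for path in ("/a", "/old"):
    chain, status = follow_redirects(path, redirects)
    print(" -> ".join(chain), f"[{status}]")
```

Anything reported as a loop or a long chain is worth fixing at the source by pointing the first URL straight at the final destination.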

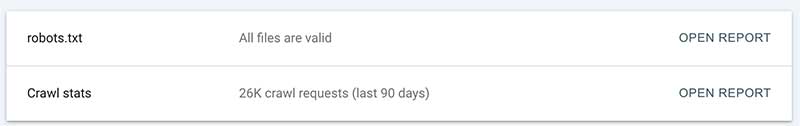

Step 4: Avoid Common Robots.txt Mistakes

Your robots.txt file tells search engine bots where they can and cannot go. Misconfiguring this file is one of the most common crawlability errors I see.

Quick tips:

- Don’t block your entire site with Disallow: /

- Allow access to important resources like JavaScript and CSS

- Only disallow folders that truly shouldn’t be crawled (like admin panels or staging environments); keep in mind that robots.txt controls crawling, while a “noindex” tag controls indexing

If you’re unsure, check your file with the robots.txt report in Google Search Console, which replaced the older robots.txt Tester.
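For a quick local sanity check, Python’s standard library includes a robots.txt parser. The sketch below uses it to list which URLs a given set of rules would block. The rules and URLs are examples I made up, and this parser is not Google’s: it follows the basic standard and may treat wildcard patterns differently, so treat the result as a first pass rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

# Example rules: block only the admin and staging folders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]

# Hypothetical URLs you care about.
urls = [
    "https://example.com/",
    "https://example.com/assets/app.js",
    "https://example.com/admin/login",
    "https://example.com/staging/home",
]

print(blocked_urls(ROBOTS_TXT, urls))
# A single "Disallow: /" blocks everything, including the homepage:
print(blocked_urls("User-agent: *\nDisallow: /\n", urls))
```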

Step 5: Use Internal Linking Strategically

Internal links serve two major functions: they distribute link equity (ranking power) and guide crawlers through your site.

To boost crawlability:

- Use keyword-rich anchor text where appropriate

- Link from high-authority pages to newly published or underperforming content

- Build hub pages that link out to related resources and posts

Smart internal linking is one of the most underrated SEO strategies. It strengthens the crawl path and helps search engines understand context.

Step 6: Eliminate Duplicate Content

Duplicate content confuses search engines and can result in indexing issues or, worse, your intended page might not rank at all.

To address this:

- Use canonical tags (<link rel="canonical">) to specify the preferred version of a page

- Avoid session IDs and tracking parameters in URLs that cause duplication

- Consolidate similar content and redirect where necessary

Canonicalization gives you control over what gets indexed, ensuring that your SEO equity isn’t diluted.
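Here is one way the parameter cleanup and canonical tag could look in Python. The list of parameters to strip is my assumption (common tracking and session names); yours will depend on your analytics setup and platform, and some parameters genuinely change the page and should stay.

```python
import html
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed parameter names; adjust to what your own tools append to URLs.
STRIP_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid", "sessionid", "sid",
}

def canonical_url(url):
    """Drop tracking/session parameters and the fragment; sort what remains."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in STRIP_PARAMS
    )
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )

def canonical_tag(url):
    """Render the <link rel="canonical"> tag for a page's preferred URL."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url), quote=True)}">'

print(canonical_url("https://Example.com/shoes?utm_source=news&color=red&sid=abc123"))
print(canonical_tag("https://example.com/shoes?size=9&color=red&utm_medium=email"))
```

Sorting the remaining parameters means two URLs that differ only in parameter order resolve to the same canonical form.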

Step 7: Leverage Structured Data

While structured data doesn’t directly influence crawlability, it enhances how your indexed content appears in search.

- Helps search engines better understand your content

- Enables rich results (like FAQs, reviews, breadcrumbs)

- Can increase click-through rates

Implementing structured data makes your indexed content more compelling and actionable for users—an indirect boost to organic performance.
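As an example of what this looks like in practice, the sketch below builds breadcrumb markup as JSON-LD using schema.org’s BreadcrumbList type. The function name and the example trail are hypothetical; most CMS plugins will generate this for you, so treat it as a look under the hood.

```python
import json

def breadcrumb_jsonld(trail):
    """Build a JSON-LD BreadcrumbList script tag from (name, url) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    # Escape "</" so a stray closing tag in the data cannot end the script early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

# Hypothetical breadcrumb trail matching a Homepage > Category > Page hierarchy.
snippet = breadcrumb_jsonld([
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("SEO Basics", "https://example.com/blog/seo-basics"),
])
print(snippet)
```

The resulting script tag goes in the page’s HTML, and it is worth validating the output with a structured data testing tool before shipping it.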

Step 8: Monitor Crawl Stats and Logs

In Google Search Console’s Crawl Stats report, look for:

- Total crawl requests

- Crawl responses (errors vs successful requests)

- File types crawled

- Average response time

You can also review your server logs for a more technical view of how search bots are interacting with your site.

If crawl frequency drops, it’s often a sign of larger issues. Stay on top of these signals to proactively manage your site health.
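If you want to try the server log route, here is a rough Python sketch that counts Googlebot requests per day and by response class. It assumes your logs use the common “combined” access log format, and the sample lines are invented. It also identifies Googlebot by user-agent string alone, which can be spoofed, so a serious audit should verify the requesting IPs as well.

```python
import re
from collections import Counter

# Matches the "combined" access log format (an assumption about your server).
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_summary(lines):
    """Count Googlebot hits per day and per response class (2xx, 4xx, ...)."""
    per_day = Counter()
    per_status = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparseable lines and non-Googlebot traffic
        per_day[match.group("day")] += 1
        per_status[match.group("status")[0] + "xx"] += 1
    return per_day, per_status

GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Made-up sample log lines for illustration.
sample = [
    f'66.249.66.1 - - [10/Mar/2025:06:25:14 +0000] "GET /blog/seo-basics HTTP/1.1" 200 5123 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Mar/2025:06:25:20 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT}"',
    f'203.0.113.7 - - [10/Mar/2025:07:01:02 +0000] "GET / HTTP/1.1" 200 8120 "-" "{BROWSER}"',
    f'66.249.66.1 - - [11/Mar/2025:03:12:45 +0000] "GET /products HTTP/1.1" 500 0 "-" "{GOOGLEBOT}"',
]

per_day, per_status = googlebot_summary(sample)
print(dict(per_day))
print(dict(per_status))
```

A falling daily count or a growing share of 4xx and 5xx responses is the kind of signal worth investigating.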

Step 9: Improve Site Speed and Core Web Vitals

Slow-loading pages can hurt crawl efficiency. If your server is sluggish, crawlers tend to slow down and fetch fewer pages per visit, so some of your pages may be crawled late or not at all.

Focus on:

- Compressing images and using next-gen formats

- Implementing lazy loading for below-the-fold content

- Reducing JavaScript bloat and unused code

- Minimizing third-party script calls

Better speed = better crawlability and user experience.

Step 10: Prioritize Mobile-First Indexing

Since Google moved to mobile-first indexing, your mobile version is now the primary one used for crawling and ranking.

To prepare:

- Ensure mobile and desktop versions have consistent content

- Use responsive design

- Avoid mobile-specific URLs (like m.example.com)

- Test your mobile usability in Google Search Console

Mobile parity isn’t optional anymore; it’s the baseline.

Final Thoughts

Maximizing website crawlability and indexing doesn’t require guesswork; it requires attention to detail, a solid technical foundation, and regular audits. By making it easier for search engines to access and understand your content, you dramatically improve your chances of ranking.

If you’re investing in content, you owe it to yourself to ensure it’s getting indexed—and that means removing any and all roadblocks for search engines.

Want help with an SEO audit or crawlability review?

Connect with me on LinkedIn or contact me for a free consultation.

Let’s make sure your content isn’t just written!